I often say that a day in which nothing new is learnt is a day wasted. Today will not be wasted.

One of the good things about the Borland Database Engine (BDE) is that it has an 'autoincrement' type; I would use a field of this type to provide primary keys for almost all database tables.

Firebird (FB) per se does not have an autoincrement type, which was a setback when I first started developing for this database manager. I use an oldish version of "EMS SQL Manager 2005 for InterBase & Firebird Lite" to create the databases, and this allows one to define fields as autoincrements. Under the hood, this program creates two entities connected to the autoincrement field: a generator and a trigger. The generator starts with a seed value; whenever it is called, it increments the seed and returns the new value, so every call to the generator is guaranteed to get a value which does not yet exist in the database. Since I started porting applications to FB, I have been calling the generator manually to get each new key value.
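For reference, the generator half of that pair can be sketched in Firebird SQL roughly as follows. The name PEOPLE_ID_GEN is the generator that the tool created for my 'people' table; the seed value of 0 is an assumption, as the tool may well pick a different one.

```sql
-- Create the generator that will hand out key values for the 'people' table.
CREATE GENERATOR PEOPLE_ID_GEN;

-- Seed value: 0 is an assumption here; the first call below would then return 1.
SET GENERATOR PEOPLE_ID_GEN TO 0;

-- Calling the generator manually: increment by 1 and return the new value.
-- RDB$DATABASE is a system table with exactly one row, so exactly one value comes back.
SELECT GEN_ID(PEOPLE_ID_GEN, 1) FROM RDB$DATABASE;
```

As far as I understand it, generators work outside transaction control, so a value that has been handed out is not reused even if the insert that wanted it is rolled back. This can leave gaps in the key sequence, but never duplicates.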

Until now I have ignored the trigger which is also defined. Today I was defining a new database and decided to look at the trigger:

    CREATE TRIGGER BI_PEOPLE_ID FOR PEOPLE
    ACTIVE BEFORE INSERT
    POSITION 0
    AS
    BEGIN
      IF (NEW.ID IS NULL) THEN
        NEW.ID = GEN_ID(PEOPLE_ID_GEN, 1);
    END;

Turned into English: whenever a program is about to insert new data into the 'people' table, if the value of the 'id' field is null then the trigger automatically calls the generator and assigns the new value to the field. This means that I don't have to call the generator manually, so I can simplify my program code.

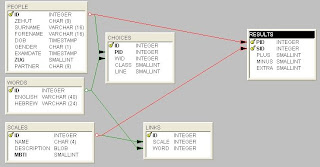

Almost all the programs I write for my occupational psychologist read in a data file which has been prepared by an 'exam' program run by an examinee; these data files' contents can be divided into two parts, where the first part is the examinee's personal data and the second part is the examinee's results.

The first part will go into the 'people' table, and I will need the id number of the inserted tuple in order to use it as a foreign key during the insertion of the 'results' tuple. Thus it's more efficient to call the generator manually for the 'people' table before inserting the data as I can save the value for later. But as the 'results' table has an autoincrement field as its primary key, I don't need to call the generator manually for this as I don't need the resulting value for anything other than as a primary key for the current tuple.
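The sequence of statements for one data file might look something like the sketch below. The parameter names and the 'name' column are hypothetical stand-ins (the real 'people' table holds more personal data than this); only the generator name and the 'results' columns come from my actual database.

```sql
-- Step 1: fetch the next key for 'people' and keep the value in the program.
SELECT GEN_ID(PEOPLE_ID_GEN, 1) FROM RDB$DATABASE;

-- Step 2: insert the person using that key (:id holds the value from step 1).
-- Because the id is not null, the before-insert trigger leaves it alone.
-- NAME is a hypothetical column used only for illustration.
INSERT INTO people (id, name)
VALUES (:id, :name);

-- Step 3: insert the results, reusing :id as the foreign key.
-- No id is supplied for 'results', so its own trigger fills in the primary key.
INSERT INTO results (pid, subject, score)
VALUES (:id, :subject, :score);
```

The manual call in step 1 is the only place where the program needs to know a generated value; step 3 can be repeated once per subject without the program ever seeing the keys of the 'results' tuples.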

To give an explicit example, if the 'results' table has the structure

    id: autoincrement (primary key)
    pid: longint (foreign key, points to person whose results these are)
    subject: longint
    score: smallint

Then instead of writing queries like this

    insert into results
    values (:p1, :p2, :p3, :p4)

I can now write this query

    insert into results (pid, subject, score)
    values (:p2, :p3, :p4)

and have the pre-insert trigger automatically generate the value for the primary key. If the 'insert' statement does not have a list of named fields then it automatically inserts data starting with the first field in the table.

Actually, I know that in the above situation, I could probably do away with the 'id' field, and have the primary key based on the fields 'pid' and 'subject', in which case using a trigger is beside the point.
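A minimal sketch of that alternative, assuming that each person has at most one result per subject, might be the following. The constraint names are hypothetical, and I have mapped 'longint' to Firebird's INTEGER type.

```sql
-- 'results' without a surrogate key: the pair (pid, subject) identifies a tuple.
CREATE TABLE results (
  pid     INTEGER  NOT NULL,  -- foreign key: the person whose results these are
  subject INTEGER  NOT NULL,
  score   SMALLINT,
  -- Composite primary key; this only works if a person cannot have
  -- two rows for the same subject (an assumption about the data).
  CONSTRAINT pk_results PRIMARY KEY (pid, subject),
  CONSTRAINT fk_results_people FOREIGN KEY (pid) REFERENCES people (id)
);
```

With this structure there is no generator and no trigger for 'results' at all, which is why the trigger question would not arise.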

Unfortunately, it seems that I will be able to use the trigger method (as opposed to calling the generator manually) only infrequently, and then only for tables whose values are entered programmatically. Many tables are populated by data entry screens, where the database table is buffered by a client dataset; in such cases I have found it impossible to get the trigger to fire, as a 'field requires value' message appears.

What is important about today's efforts is that I am thinking about how to use the dbExpress/Firebird combination in the most efficient manner. This is all still relatively new material to me, whereas the BDE code has had over a decade to settle and improve.